@UST.HK

Robots now coming into being in HKUST labs are like babies. A child initially knows nothing of the world. But using its five senses – hearing, sight, touch, taste, and smell – an infant has the capacity to rapidly learn from what it perceives in the immediate environment and how that environment changes in response to its actions.

This is the perception-action cycle, and understanding it is also vital for the development of the next generation of robots. The new robot may start its existence as a blank slate. But if, like a child, it has the capacity to teach and correct itself, it can do much more than a pre-programmed machine.

Prof Bertram Shi is leading research where electronics and computing meet biology and neuroscience. Prof Shi is renowned in the robotics community for his work in developing the Active Efficient Coding (AEC) framework for machine learning in collaboration with Prof Jochen Triesch’s team at the Frankfurt Institute for Advanced Studies in Germany. This framework extends Horace Barlow’s groundbreaking Efficient Coding hypothesis, put forward in 1961 to explain how the brain works at the neuronal level.

The brain, Barlow hypothesized, tries to form an efficient representation of the environment by switching on as few neurons as possible, thus minimizing the energy involved.

GROWING UP

Prof Shi and his team realized this hypothesis was incomplete, as it assumed a passive organism, where the properties of neurons adapt to the statistics of sensory input. What was missing from Efficient Coding was that organisms actively shape and optimize such statistics through their behavior. The researchers added the effect of active behavior to the hypothesis in work first presented at the 2012 IEEE International Conference on Development and Learning and Epigenetic Robotics.

The team’s AEC framework takes into account how animals and humans utilize their motor system to facilitate the efficient encoding of relevant sensory signals. An example is the “vergence eye movement”: the simultaneous movement of both eyes in opposite directions to align the images from the left and right eyes so that they can be fused into a coherent percept. The framework is a powerful unifying principle for the development of neurally inspired and driven robots. It underpins technology that will enable robots to become more adaptive, structure their own behavior automatically, and predict interventions that match the biological systems they mimic. Prof Shi’s team has already shown this single principle can account for the emergence of a wide range of other behaviors, such as visual tracking and accommodation (focusing), and the automatic combination of multiple sensory cues.
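For readers who think in code, the vergence example can be reduced to a toy sketch: an agent picks the eye movement that makes its two views cheapest to encode. Everything below is an illustrative assumption rather than the team's implementation. The 1D random "texture", the function names `eye_patches`, `coding_error` and `choose_vergence`, and the deliberately crude code (one shared patch standing in for both eyes) are all hypothetical, and the exhaustive search over candidate movements replaces the learning that the actual framework relies on.

```python
import numpy as np

def eye_patches(texture, fixation, disparity, vergence, width=16):
    """Return (left, right) views of a 1D texture.

    Assumption: the right eye's view is shifted by the scene disparity,
    and a vergence command shifts it back by the same units.
    """
    offset = disparity - vergence
    left = texture[fixation : fixation + width]
    right = texture[fixation + offset : fixation + offset + width]
    return left, right

def coding_error(left, right):
    """Reconstruction error of a very cheap code: one shared patch for both eyes.

    The error is zero only when the two views are identical, i.e. aligned.
    """
    shared = 0.5 * (left + right)
    return float(np.sum((left - shared) ** 2 + (right - shared) ** 2))

def choose_vergence(texture, fixation, disparity, candidates):
    """Pick the vergence command whose resulting input is cheapest to encode."""
    errors = [
        coding_error(*eye_patches(texture, fixation, disparity, v))
        for v in candidates
    ]
    return candidates[int(np.argmin(errors))]

rng = np.random.default_rng(0)
texture = rng.standard_normal(256)   # hypothetical visual scene
candidates = list(range(-8, 9))      # hypothetical set of eye movements
best = choose_vergence(texture, fixation=100, disparity=5, candidates=candidates)
```

In this toy setting the chosen command cancels the disparity, so the two views line up and can be "fused" into the single shared patch. The point of the sketch is only the direction of the logic: the action is selected because it improves the efficiency of the sensory code, not because alignment was programmed in as a goal.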

Prof Shi explained: “In developmental robotics, ideally we want to put a robot in the environment and let

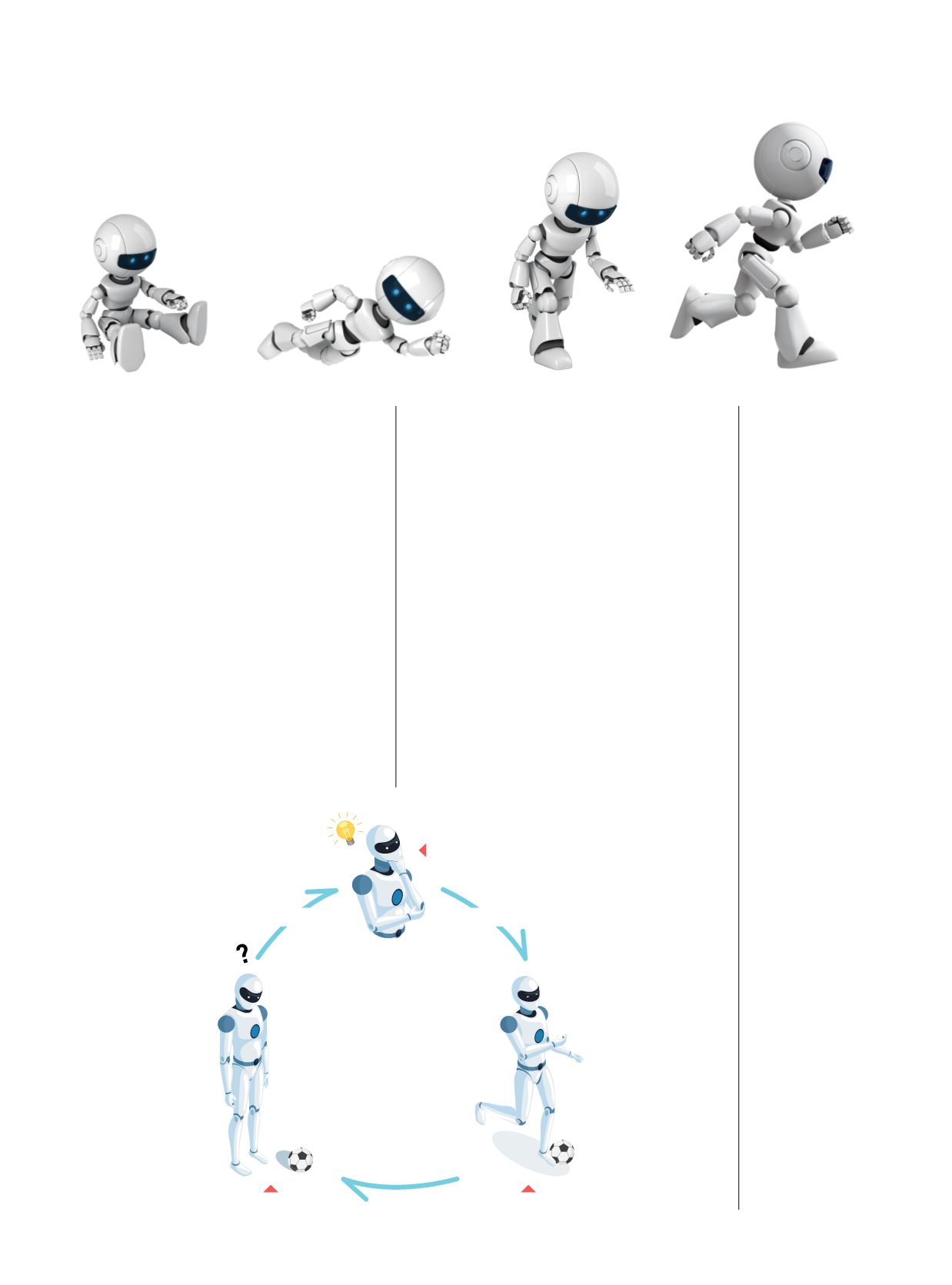

The Active Efficient Coding (AEC) framework utilizes the perception-action cycle model to advance development of next-generation robots.

[Diagram: PERCEPTION-ACTION CYCLE. Labels: Sensory input, Sensory representation in brain, Action; PERCEPTION, BEHAVIOR.]

Like a baby, a robot learns to predict the consequences of its movement and adapt to the environment through repeated failure and trying again.