
@UST.HK

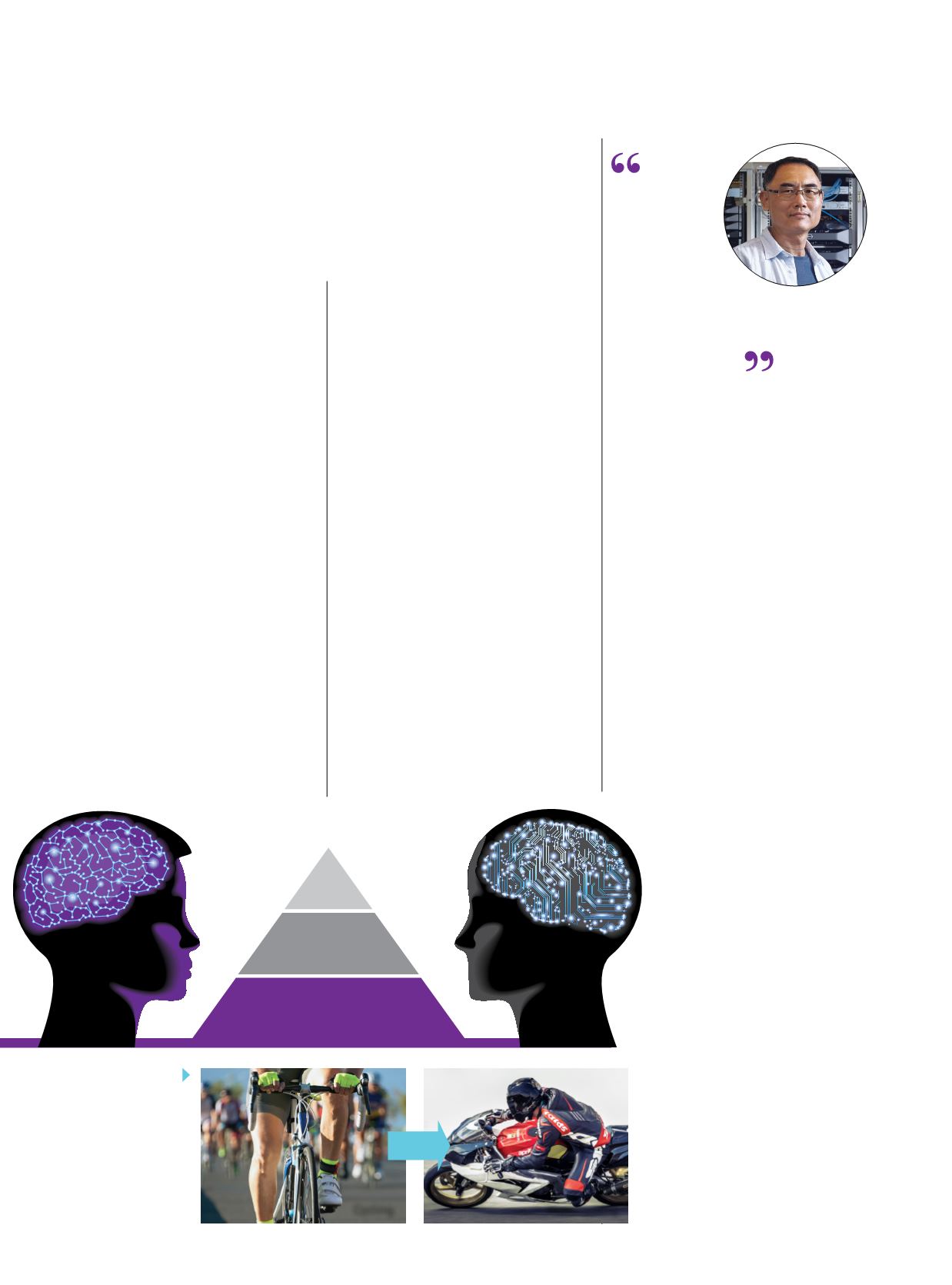

[Figure labels: Reinforcement Learning, Deep Learning, Transfer Learning]

BUILDING INTELLIGENCE

From Numbers to Knowledge

For a person, recognizing and applying knowledge and skills learned in previous tasks to new endeavors comes naturally. After understanding how one card game works, it is easier to pick up another. For a computer, such learning is incredibly hard.

This is the specialist domain of Prof Qiang Yang, an expert in data mining, artificial intelligence, machine learning, transfer learning and deep reinforcement learning.

Prof Yang has spent 20 years working on algorithms that seek to give computers a humanlike capacity to retain and reuse previously learned knowledge, so that they can “think” and “decide” how to extract information and patterns from the rivers of data flooding our digital age. Prof Yang and his team have improved computers’ accuracy by devising versatile frameworks for such “transfer learning”. He has developed Instance-based Transfer Learning, which uses individual instances to bridge different domains, and Heterogeneous Transfer Learning, where the computer learns in one knowledge domain (for example, text) then transfers what it has learned to a separate or more difficult domain (for example, images). Prof Yang has made these frameworks open source, enabling other researchers and the field overall to develop at a faster pace. He was also the first to propose the use of transfer learning in collaborative filtering and recommender systems. Applications have ranged from early online advertising directed at users to improvements in recommendation systems, including a state-of-the-art recommendation system for global ICT giant Huawei’s App Store.
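To make the idea of instance-based transfer more concrete, here is a minimal sketch in Python. It is not Prof Yang's framework: it assumes one common reading of the approach, in which labelled instances from a source domain are re-weighted according to how closely they resemble unlabelled data from the target domain. The function names, the Gaussian similarity measure, the `bandwidth` parameter and the toy one-dimensional data are all hypothetical choices made for illustration.

```python
import math

def instance_weights(source_x, target_x, bandwidth=1.0):
    """Weight each source point by its average Gaussian similarity
    to the (unlabelled) target points. Illustrative assumption only."""
    weights = []
    for s in source_x:
        sims = [math.exp(-((s - t) ** 2) / (2 * bandwidth ** 2)) for t in target_x]
        weights.append(sum(sims) / len(sims))
    return weights

def weighted_threshold(xs, ys, weights):
    """Fit a toy 1-D classifier: the decision threshold is the midpoint
    between the weighted means of class 0 and class 1."""
    def weighted_mean(label):
        pairs = [(x, w) for x, y, w in zip(xs, ys, weights) if y == label]
        total = sum(w for _, w in pairs)
        return sum(x * w for x, w in pairs) / total
    return (weighted_mean(0) + weighted_mean(1)) / 2

# Hypothetical toy data: labelled source domain, unlabelled target domain.
source_x = [0.0, 1.0, 4.0, 6.0, 9.0, 10.0]
source_y = [0, 0, 0, 1, 1, 1]
target_x = [3.0, 3.5, 4.0]

weights = instance_weights(source_x, target_x)

# Threshold learned from the source alone (all instances count equally).
plain = weighted_threshold(source_x, source_y, [1.0] * len(source_x))
# Threshold after re-weighting source instances toward the target domain.
transferred = weighted_threshold(source_x, source_y, weights)

print(plain, transferred)
```

In this toy setting, the source instances that sit closest to the target data dominate the fit, so the decision threshold moves toward the region where the target domain actually lives. Real instance-based methods work on high-dimensional data and estimate the weights far more carefully.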

Recent research at the WeChat-HKUST Joint Lab on Artificial Intelligence Technology (WHAT LAB), set up with Mainland China internet giant Tencent in 2015, has inspired a novel application to improve machine reading capabilities. Books, news articles, and blogs are used as input to train a machine learning model that can produce an abstract of such readability that it doesn’t appear to have been written by a machine. The objective is to assist people with information overload on social networks or boost company productivity by enabling a computer to develop an abstract of a long report or integrate data and highlight the main points the reader needs to know. By reading books by the same author, the demonstration model designed by Prof Yang and his students has even written a high-quality novel of its own in the writer’s style, taking just a few seconds to do so.

In improving such machine reading abilities, Prof Yang’s team has become the first to integrate a reinforcement learning algorithm with transfer learning and deep learning (recurrent neural networks). The reinforcement learning algorithm leverages user feedback, in the form of positive comments on prior abstracts, to help the computer make a more intelligent decision on what abstract to generate. The innovation has substantially improved the quality of the abstracts. With information to hand more quickly, it can also speed up report-writing as well as learning.

“The work at HKUST seeks to increase the knowledge we can get from data by making the process of moving from data source to understanding faster and more efficient, accurate and useful to people,” Prof Yang said.

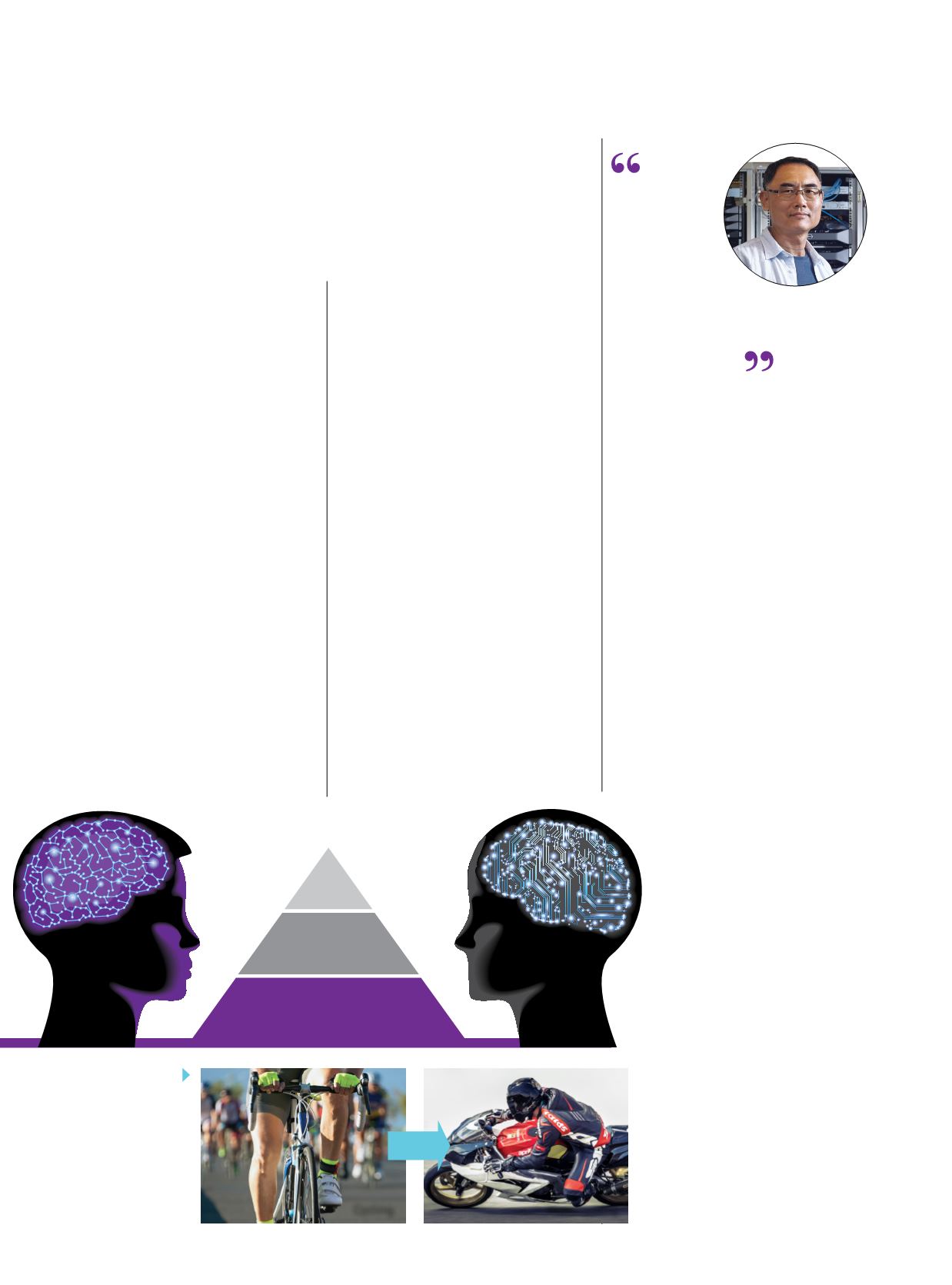

Cycling

Motorcycling

Humans have the ability to apply knowledge and skills learned in previous tasks (e.g. cycling) to new tasks (e.g. motorcycling). Prof Yang’s transfer learning algorithms help computers to acquire the ability to retain and transfer knowledge from a source domain to a target domain.

We are inventors, always thinking of how to use data in a new way

PROF QIANG YANG

New Bright Professor of Engineering,

Head, Department of Computer Science and Engineering,

Director, HKUST Big Data Institute,

Inaugural Editor-in-Chief of IEEE Transactions on Big Data

Prof Yang combines three levels of machine learning techniques to achieve highly innovative machine reading capabilities.